- Home

- Sorting algorithms

- Insertion sort

Insertion sort

Background

Insertion sort is one of the most efficient among the O(n2) sorting algorithms. It has a worst and average time complexity of O(n2). Its best case is when the input is already sorted. This results in linear complexity O(n). It is stable and adaptive. It is one of the most efficient algorithms for sorting a small data set. For that reason, some divide-and-conquer algorithms use it in their final phase, when the input is divided to smaller parts (0 < n < 10 to 20).

Advertise on this site. I promise you will like the rates :)

It has one interesting and very rare feature – it is “online”. This means that the array (or list) can arrive one item at a time and still be sorted correctly. This is very useful if we want to add new elements to an already sorted array.

Algorithm

Insertion sort makes n-1 passes. Unlike the bubble sort and the selection sort, the pass is done through the sorted part of the array.

- The first pass takes the second element. It is compared to the first element.

- If they are in the correct order – we can continue with the next pass.

- If they are in the wrong order - the value of the second element is saved in a temporary variable, then the first element is shifted to second position and the saved value in the temporary variable is written in first position. - Now the first two elements are sorted in relation to each other. The second pass takes the third element and compares it to the second.

- If they are in the correct order – continue with the next pass.

- If they are in the wrong order we continue to compare the current element(the third) with the previous elements until we find its correct place. Then we save the current value, shift all elements that need to be after it and insert the element to its correct location. - This repeats until all numbers are inserted to their correct place.

As always, we will make a detailed example to apply the new algorithm:

Example

Sort the numbers ( -3, 4, 0, 15, 7, 1) using insertion sort. In this example, the bold numbers are the ones that we are inserting the current step.

Pass 1: -3, 4, 0, 15, 7, 1

1. 4 < -3? No

Pass 2: -3, 4, 0, 15, 7, 1

1. 0 < 4? Yes

2. 0 < -3? No => Shift 4, Move 0.

Pass 3: -3, 0, 4, 15, 7, 1

1. 15 < 4? No

Pass 4: -3, 0, 4, 15, 7, 1

1. 7 < 15? Yes

2. 7 < 4? No => Shift 15, Move 7

Pass 5: -3, 0, 4, 7, 15, 1

1. 1 < 15? Yes

2. 1 < 7? Yes

3. 1 < 4? Yes

4. 1 < 0? No => Shift 4, 7, 15, Move 1

-3, 0, 1, 4, 7, 15

As seen (in pass 3), the algorithm very easily recognizes if the element is already in the correct place. This means it is adaptive and its best case completes in linear time O(n) if the input data comes sorted. In this case there would be n comparisons and 0 swaps/shifts.

Implementation

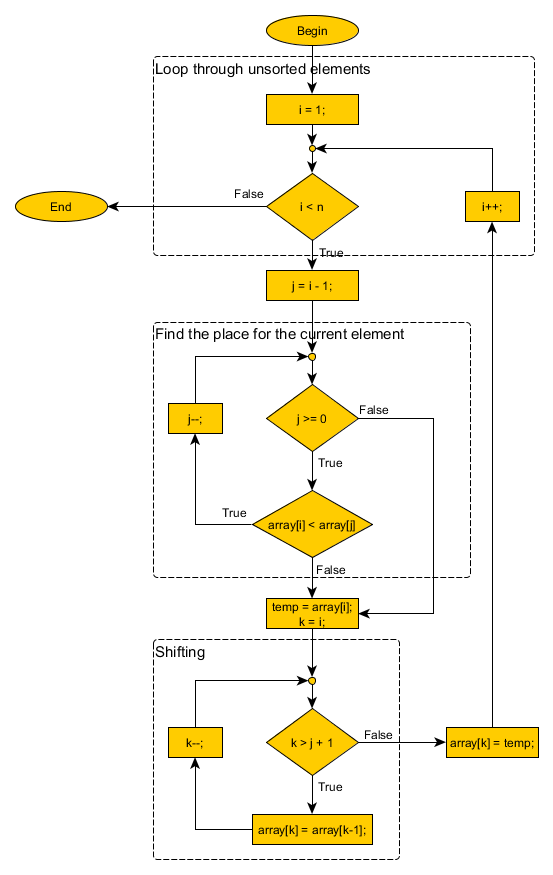

For the implementation of insertion sort we need two loops. The first will iterate through the unsorted part of the array. For each unsorted element the second loop will go through the sorted part and search for its correct location. This means that we need to nest the second loop into the first. After we find the correct place to insert the current element, we need to shift all remaining elements. For that purpose we need one more loop.

*A simpler implementation exists that uses swaps instead of insertion and shifts. It could be useful when swapping is fast(if the elements are integer numbers), but it becomes very slow if we need to swap objects.

How much time the insertion sort costs?

If “n” is the total number of elements and “m” is the number of sorted elements in the current pass.

For each of the “n” passes it makes:

- on average m/2 comparisons. In the worst case this will be equal to n/2. So we have (n passes) * (n / 2) comparisons. => O(n2) comparisons.

- m / 2 number of shifts, in the worst case – n /2. => O(n2) shifts.

As you see, the number of actions are divided by the factor of 2. At the same time the algorithm is adaptive (it takes advantage of pre-sorted elements) and has better average efficiency then bubble sort and selection sort.

But still – in the worst case both comparisons and shifts have O(n2). This doesn't look too efficient, does it?

Can we optimize this? Let's see...

Reducing the comparisons

Binary insertion sort

Since all the comparisons are done in the sorted part of the array, we can use binary search to find the correct place for the current item. Binary search has a time complexity of O(log2n), which is really better than linear search. This helps to reduce the number of comparisons from n to log2n in one pass or n*log2n for the whole sorting.

Important! Binary search has logarithmic time complexity only if the access to the elements is constant time. This is the case with normal arrays, where indexing requires indeed constant time.

Other data structures, like linked list will most likely require linear time to access an element at random position. In this case the binary search will go to O(n*log2n), which is worse than linear search.

Reducing the shifts/swaps

Sorting linked list

We have just mentioned that data structures like linked lists can't benefit from binary search to reduce the number of comparisons. This is because of their structure. The question here is - “Can we use their structure to our advantage?”. The answer is “Yes”.

Linked lists use pointers to access the next element. This makes it very easy to make a real insertion of the element to its correct place. After we found the correct place for the current item, we just need to insert it to the right place and remove it from the old place. That's all, no shifting is needed.

This reduces the shifting/swapping time from linear to constant! So in result, when sorting linked lists we have only O(n) swapping complexity. However, the comparisons still have that quadratic count.

Note that there are data structures that provide both fast insertion and random item access in constant time. This combines the two optimizations. For such structures insertion sort has O(n * log2n) time complexity and still O(1) space complexity! Such data types are for example skip lists, or modified linked lists. The latter uses a pair of two pointers(one to the value, and one to the next element) instead of a value and a pointer.

Library sort (Gapped insertion sort)

What was the main problem with insertion sort, when sorting an array? Yup, the high number of shifts or swaps that we need to do every time we insert a new element.

Library sort is a successful attempt to correct this problem. It makes a “normalization” to the sorted elements, by making gaps between them. The gaps are empty elements. When we insert the next item, we don't need to shift.

After every insertion, the normalization is repeated, so that we can use the fast insertion again.

The computations to prove the complexity here are rather complex ;-), but if you are interested, look here: presentation about library sort and also here: analysis and explanation of library sort.

In conclusion: The overall cost of library sort is O(n * log2n) with high probability. Note that it requires additional space for the gaps.

Summary

Insertion sort:

- is stable

- is adaptive

- is online - can sort the data as it receives it

- is easy to implement

- has constant space complexity O(1)

- is one of the most efficient algorithms to sort a small number of items

- can be optimized to do only n*log2n comparisons if access time is constant

- can be optimized to do only n swaps when sorting data structures like linked list

- can run in O(n*log2n) time if applied to specific data structures

- is a base for library sort, which can run in O(n*log2n) time complexity

- is used in practice to complement algorithms like quicksort, shell sort

- Home

- Sorting algorithms

- Insertion sort